Author C. J. Cherryh is one of the last great living masters of science fiction, easily on a par with Clarke, Herbert, or Wolfe. Her strength is in building worlds populated with believable humans and non-humans, and then writing those characters in such a way that the reader ends up deeply empathizing with them—even the most alien of aliens.

Her best-known works are the long-running Alliance-Union novels, which, taken together, form a war-filled future-history epic of humankind's expansion off Earth and into the rest of the galaxy. However, for the past couple of decades Cherryh has been focusing on a different series altogether: the Foreigner books.

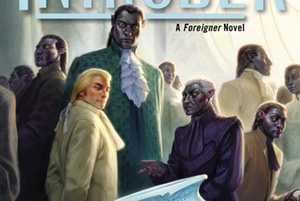

The series (starting with Foreigner) tells the tale of a lost human colony ship forced to take permanent refuge at a far-off world populated by heretofore undiscovered aliens: the three-meter-tall, black-skinned atevi. Atevi don’t experience the same emotions as humans and have an innate perception of numbers that’s described as roughly analogous to the human perception of color. Humanity and atevi are similar enough that they quickly establish cordial relations, and different enough that war is inevitable.

But I’m not going to do a whole series recap—we’d be here forever, since the series at this point consists of 19 books with at least two more to come. Instead, I want to focus on a very touchy subject, and one about which readers of the books will no doubt have very spiky feelings: pronunciation of names and places.

N.B. Folks who haven’t read at least one Foreigner book should probably bail on this entry, because it isn’t going to be super-interesting unless you’ve already got some Ragi words bouncing around in your head.