Nginx and I have a long history. BigDinosaur.org first went online some time in 2010 with a little Apache-powered homepage, and it didn’t take long for me to switch over to Nginx—probably to be contrary, more than anything else, because Nginx was a fascinating underdog that was steadily winning web server market share with its speed and flexibility. I liked it. I blogged about it. A lot. I thought I’d found a piece of software I could live with forever.

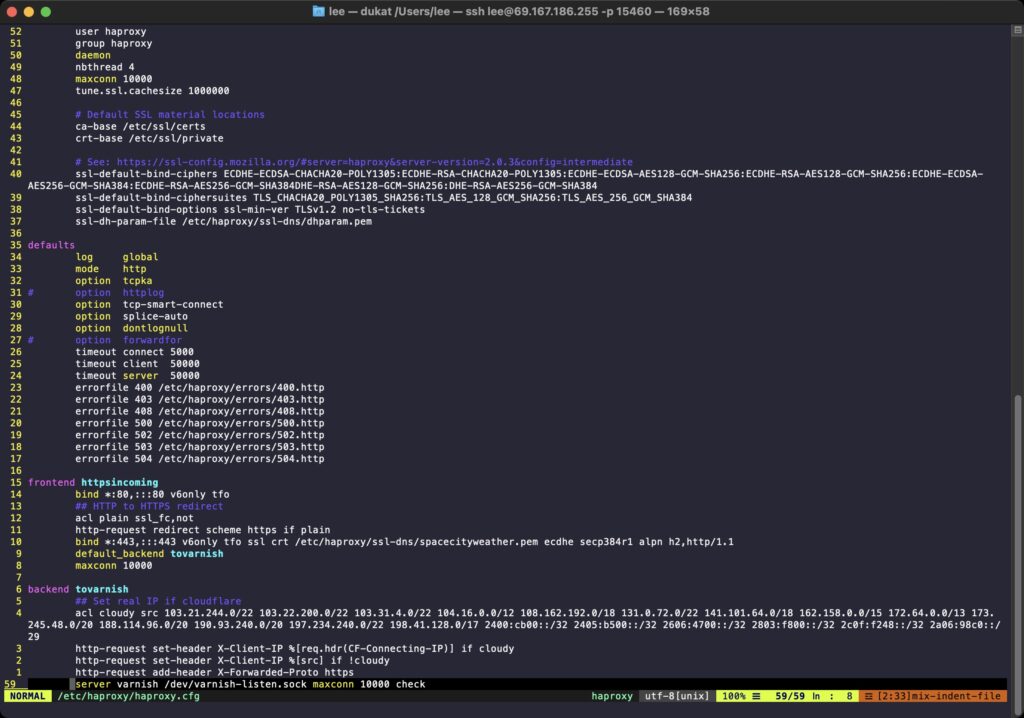

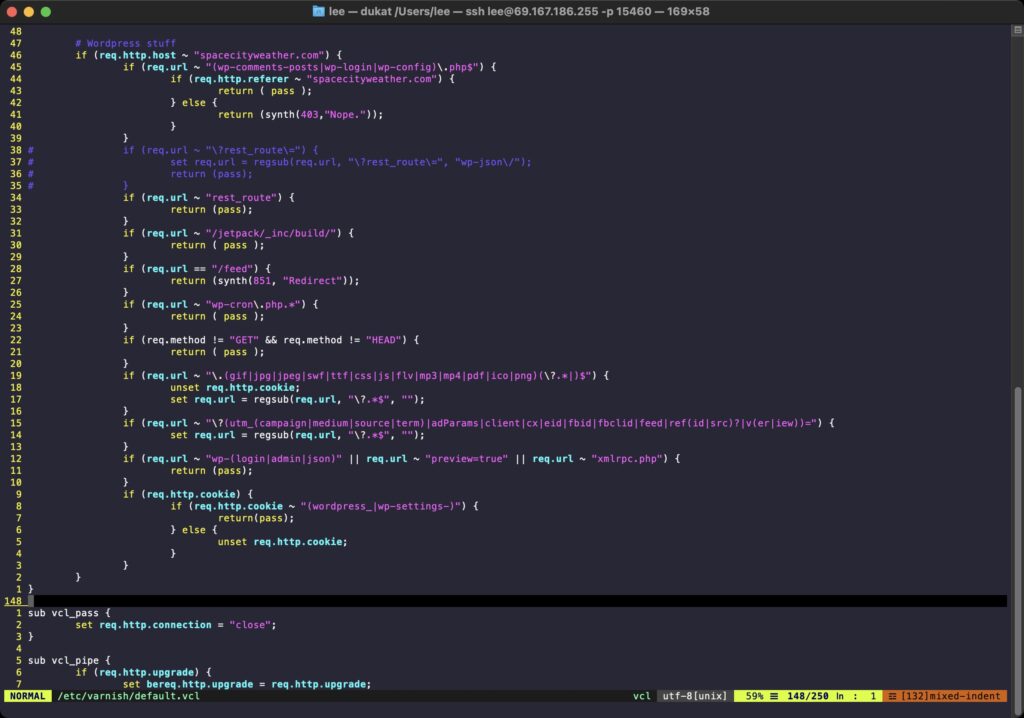

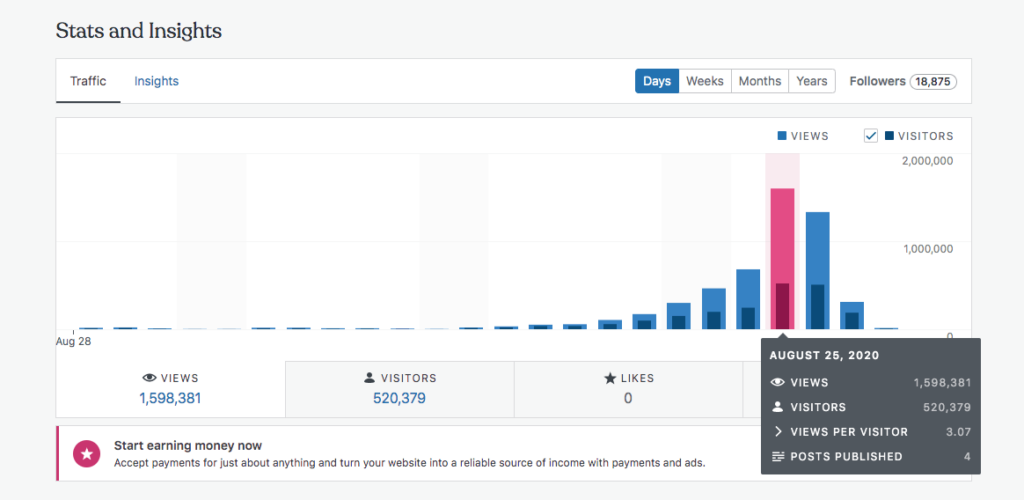

And don’t get me wrong: Nginx is great. But in the decade since 2010, my web hosting ambitions have grown and I’ve incurred a lot of technical debt. Nginx gave way to Nginx and Varnish, and then after the HTTPS revolution happened, Nginx and Varnish and HAProxy. For a time, things were good. Varnish is maybe a little heavy-duty for my needs, but I appreciated the crazy stuff it let me do with very fast redirects and screwing around with cookies. It made hosting WordPress a little nutty, but my hosting strategies were working out well where I was applying them under real load at Space City Weather, which weathered a peak load of 1.5 million pageviews in a single day during Hurricane Laura’s near-miss of the Houston area in 2020.

But as bulletproof as the HAProxy-Varnish-Nginx stack was, as the years wore on and things evolved, it grew to be kind of a pain in the butt to maintain, especially when mixed with Cloudflare on a few of the sites I maintain. Troubleshooting issues while dealing with both a caching CDN (Cloudflare) and a local cache layer (Varnish) sometimes caused me to pull my hair out. And after a decade on the same hosting stack, I was growing curious about some of the newer options out there. Was there something I could use to host my stuff that might give around the same level of performance, but without the complexity? Could I ditch my triple application stack sandwich for something simpler?

Looking to OpenLiteSpeed

OpenLiteSpeed is the libre version of LiteSpeed Enterprise, a fancy web server with a whole bucketload of features, including native HTTP/3 support. It’s got a reputation for being very quick, especially at WordPress hosting, and part of that quickness comes from its built-in cache functionality. There are lots of benchmarks claiming that OLS’ native cache is more performant than either Varnish or Nginx’s FastCGI cache, and there’s a WordPress plugin that automates a lot of the cache management tasks.

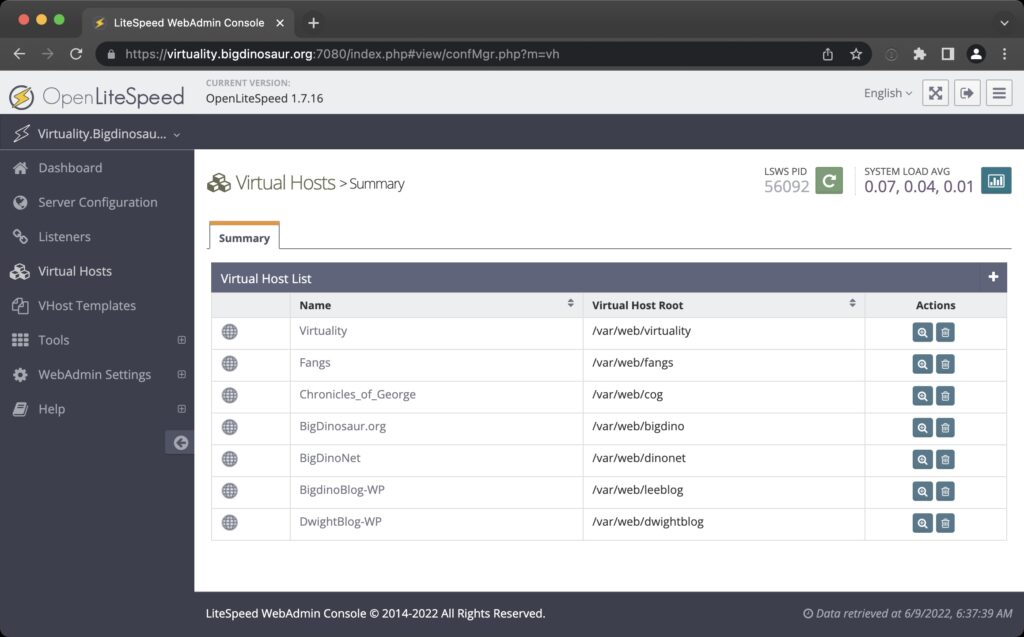

Those things were enough to get me interested, and I’ll be the first to admit that I make tech decisions mostly based on whims, so I started thinking about making the jump. Moving my personal sites would be easy, since BigDinosaur.org, Fangs, and the Chronicles of George are all static. This blog and Dwight’s blog would provide good practice with configuring WordPress and PHP on OpenLiteSpeed.

The two bits I was worried about were the BigDino/CoG Discourse forums, and Eric Berger’s Space City Weather—the former because Discourse can be a cantankerous beast, and the latter because SCW is an important site that hundreds of thousands of people in the Houston area rely on every day for weather information (especially during hurricane season). I could screw up migrating my own stuff, but SCW had to be done properly and with minimal downtime.

Cloud time

On top of that, I was looking to save myself some hosting money. Since late 2017, I’ve been paying for a dedicated server at Liquid Web for my own use, and while it’s been incredibly freeing to have as many web resources as I could ever need, it was also costing about $2,000 a year. Looking at my actual requirements in terms of RAM, CPU, storage, and bandwidth, it became pretty clear that in spite of Liquid Web’s extremely reasonable dedicated server pricing, keeping the box was like lighting money on fire.

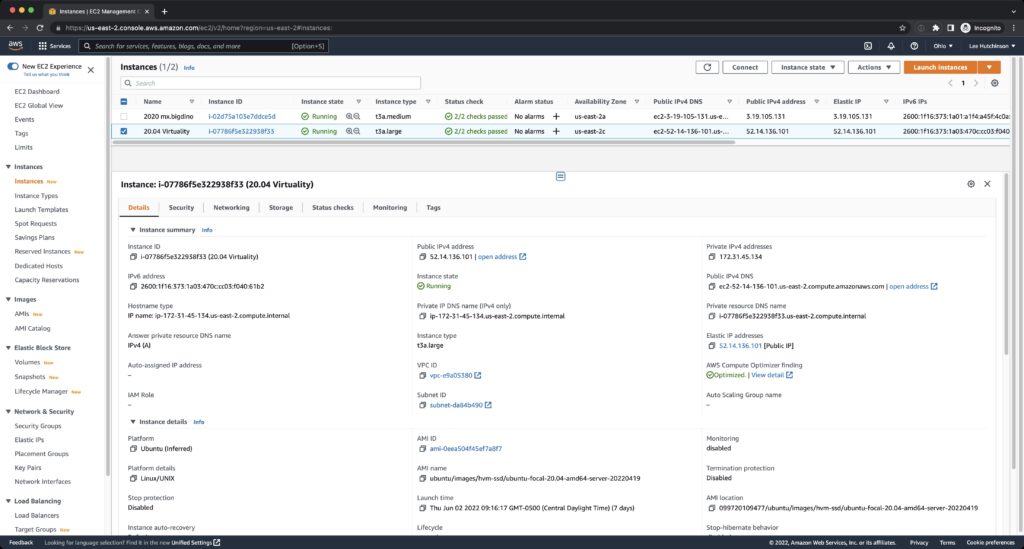

AWS beckoned. I was already using an AWS EC2 t3a.medium instance to run the BigDinosaur.org e-mail server; I’d picked AWS for that because, perhaps surprisingly, the cost proved pretty reasonable. You can prepay for what Amazon calls a “reserved instance,” and a three-year prepayment for the t3a.medium-sized mail server was only $350 or so. I’d need to step up to a t3a.large instance to get 8GB of RAM—definitely necessary in a cache-heavy configuration like I wanted to run—but the pricing stayed pretty reasonable.

I think EC2 gets somewhat of a bad rap among non-business hosters, in terms of both complexity and costs, but I quite like it. It is overly complex, but you can ignore most of it and just focus on the parts that you need. With reserved instance pricing being so downright reasonable, it seemed like the time to make the switch from physical to cloud hosting.

Moving day

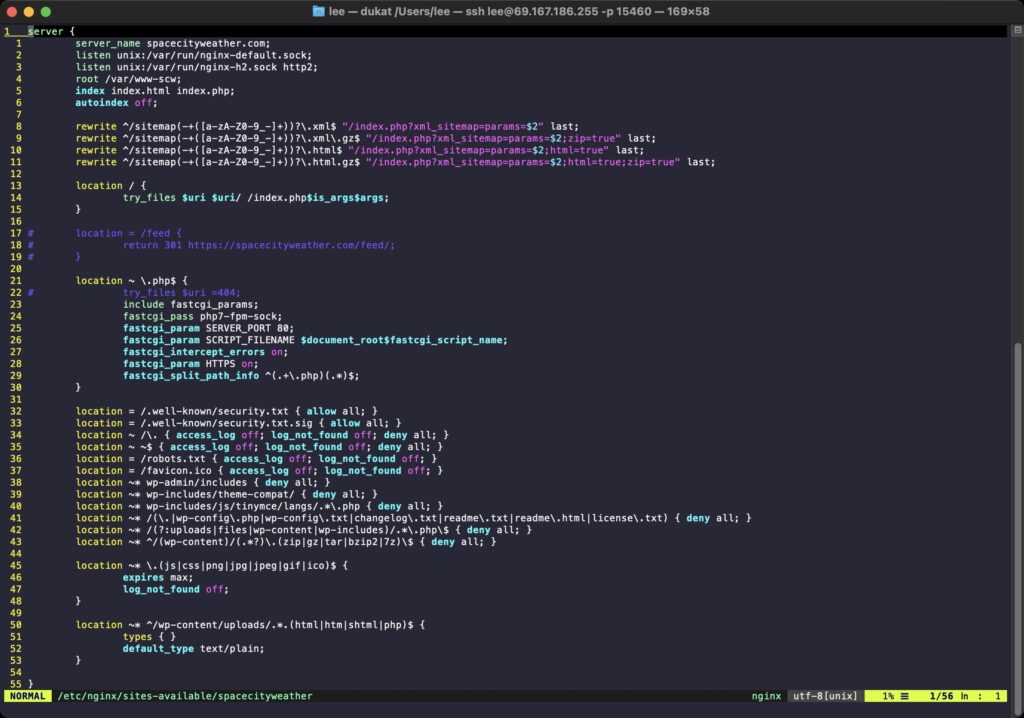

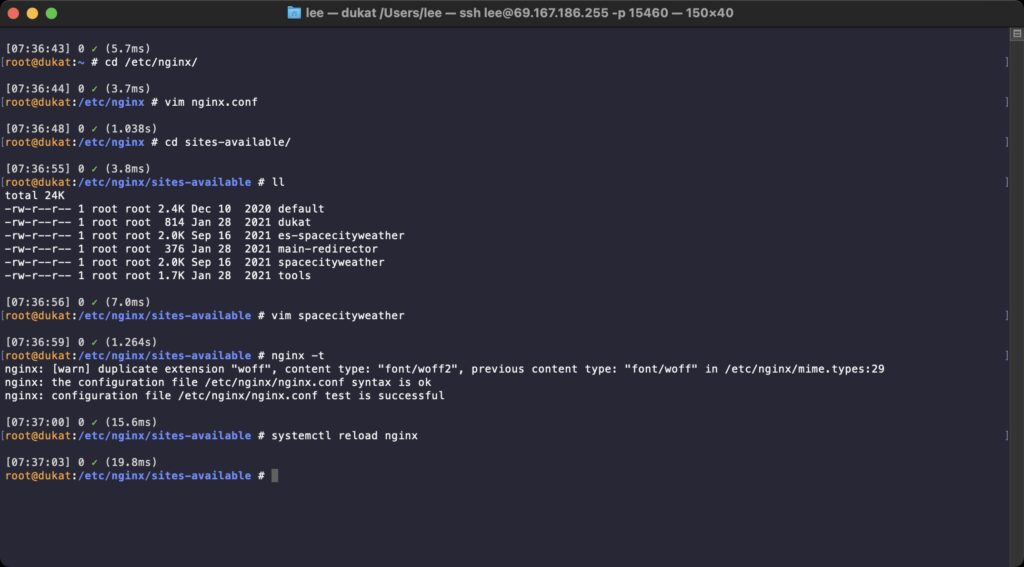

I ended up doing the migrations spread over a full weekend. The biggest issue for me was getting my brain out of Nginx mode and forcing it into Apache mode, since OpenLiteSpeed uses Apache-style .htaccess files and Apache-style rewrites. Nginx ignores .htaccess and uses config files with a vastly different syntax and options, so it took quite a bit of googling for examples before I figured out all of the steps necessary to recreate equivalent hosting setups for each of the sites.
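To give a rough sense of the difference, here is a minimal sketch of one common task, forcing plain-HTTP traffic over to HTTPS, written as Apache-style rewrite rules of the sort OLS can read from an .htaccess file, with a typical Nginx equivalent shown in the comments. It is an illustrative assumption, not a rule taken from any of the sites mentioned here; the hostname is hypothetical, and rewrite handling may need to be switched on for the vhost before rules like this take effect.

```apache
# Apache-style rewrite rules (illustrative sketch, not from a real site config).
# Goal: permanently redirect any plain-HTTP request to the same URL over HTTPS.
RewriteEngine On
# Only act when the request did not arrive over HTTPS.
RewriteCond %{HTTPS} !on
# Keep the host and full request URI, switch the scheme, and stop processing.
RewriteRule ^ https://%{HTTP_HOST}%{REQUEST_URI} [R=301,L]

# A typical Nginx equivalent lives in the server config rather than .htaccess:
#
#   server {
#       listen 80;
#       server_name example.com;   # hypothetical hostname
#       return 301 https://$host$request_uri;
#   }
```

The logic is the same either way; what changes is where the rule lives and how the condition is expressed, which is most of what the mental shift from Nginx to Apache-style configuration amounts to.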

An aspect of OLS that I initially intensely disliked is its web configuration console. You can configure OLS by manually editing config files, but the preferred way to do OLS admin tasks is through the GUI. This is a pretty substantial change from Nginx, where everything is done by editing config files.

I’m still not a hundred percent on board with the GUI configuration, and I wish there was an easier way to clone an existing vhost as a template, but it’s also a testament to OLS’ functionality that I was able to get almost everything migrated over in a weekend.

Space City Weather took a little longer, but it too is now running on OLS. The only thing that didn’t quite make the jump was the BigDino Discourse instance, which I shoved onto a spare EC2 reserved instance and fronted with good ol’ Nginx as a reverse proxy, because that’s pretty easy and at that point I was tired of difficult things.

And so here we are, with our new OLS-based stack. It’s been a few days and nothing’s caught on fire yet, so that’s pretty good. In a lot of ways I’m going to miss HAProxy and Varnish and Nginx; in the ten-plus years I’ve been using that stack, I’ve gotten very familiar with wandering through those applications’ config files. I’ve lived with those programs and found out some neat tricks. Letting go of that makes me feel surprisingly wistful—it’s a good stack, though the complexity of managing new things was just getting out of hand.

Hopefully OLS will keep me busy for another ten years.