This is an old post. It may contain broken links and outdated information.

Web site performance has been on my mind a lot lately. An average day for this blog means serving only a few hundred visitors and maybe 400-500 page views, but bigdinosaur.org also hosts the Chronicles of George, which carries a much higher average load; on days when a link hits Reddit or a popular Facebook page, the CoG can clock 10,000-12,000 page views. This is still small potatoes compared to a truly popular site, but it pays to be prepared for anything. Setting up a web server to be as fast as possible is good practice for the day it gets linked to from Daring Fireball or Slashdot, and even if that day never comes, there’s nothing wrong with having an efficient and powerful setup that can dish out a huge amount of both static and dynamic content.

So in the course of some casual perusing for Nginx optimizations, I happened across Blitz.io, a Heroku-based site that lets you load-test your web server. I was immediately intrigued. I’ve done load testing on my LAN before using Siege and ApacheBench, and LAN-based testing is useful to a point, but it won’t help you understand the over-the-net performance of your web site. Blitz.io fills a gaping hole in web site testing by letting you observe how your site reacts under load in real-world conditions. I signed up and ended up killing several hours with testing, mitigation, and re-testing. The results were unexpected and incredibly valuable.

A useful service

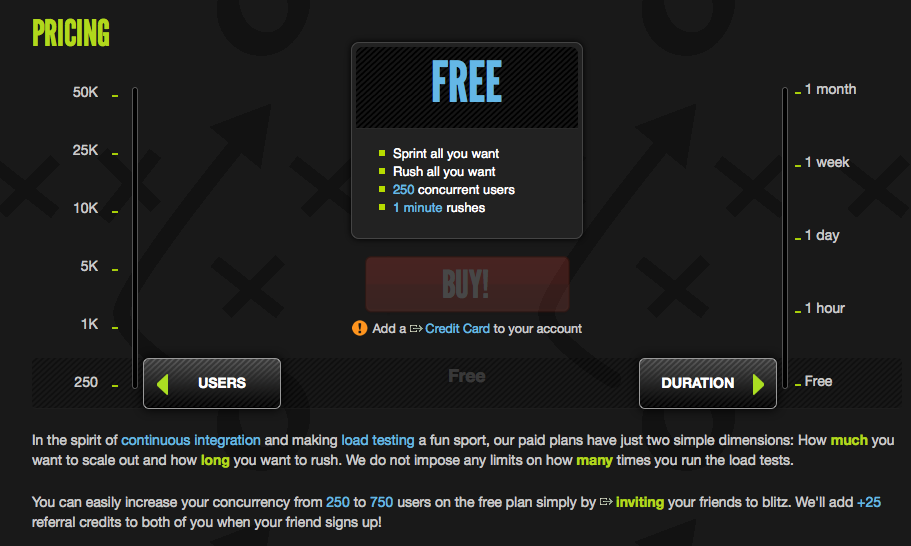

Blitz.io offers an entire series of test regimes. For casual users like me, there is a free tier, which lets you apply a load test (or “rush,” as they call it) of up to 250 concurrent users for up to 60 seconds. You’re allowed to run free-tier rushes as often as you like; each test is tracked in real time, and the results are presented in a very clear and readable format. Paid tiers scale up to 50,000 concurrent connections, with tests as long as 20 minutes. This makes sense, since rushes involve a substantial amount of horsepower and bandwidth; if you’re bringing a large commercial site online and need to see how it reacts under a monstrous load, being able to engage Blitz.io to test your site immediately, without having to coordinate a simulated test environment, could be invaluable.

But for my small site, the free tier was perfect. Without any idea what to expect, I made sure that Blitz was authorized to rush my site (which is done by placing a specifically named text file in your site’s root directory) and hit the “PLAY” button.

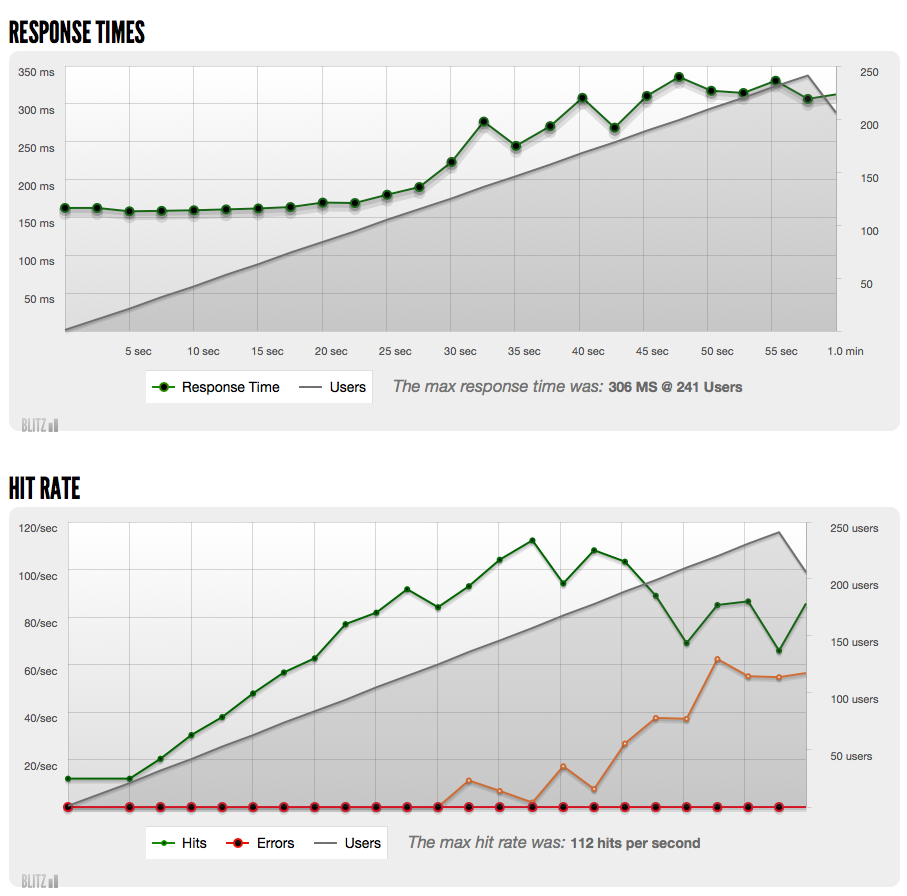

In its default form, Blitz starts testing with a single connection and quickly ramps up to the maximum of 250 simulated users over the span of the 60-second test. The front page of bigdinosaur.org started out just fine and held its own up to about 100 concurrent users, but then Blitz began to report that its connection attempts were being met with timeouts; worse, the latency of the connections began to spike, climbing from about 150 ms to 300-400 ms. The test quickly sputtered out: as the number of simultaneous connections mounted, the bigdino web server struggled to keep up, eventually abandoning most of the HTTP requests and letting them time out.
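The exact shape of Blitz.io’s ramp isn’t described here, so the sketch below simply assumes a straight line from 1 to 250 users over 60 seconds, to estimate roughly when in the rush the trouble began. `concurrency_at` and `seconds_until` are hypothetical helpers, not part of any Blitz.io API.

```python
def concurrency_at(t: float, start: int = 1, end: int = 250,
                   duration: float = 60.0) -> int:
    """Simulated users t seconds into a rush, ASSUMING a linear ramp."""
    if not 0 <= t <= duration:
        raise ValueError("t must fall within the test window")
    return round(start + (end - start) * t / duration)


def seconds_until(users: int, start: int = 1, end: int = 250,
                  duration: float = 60.0) -> float:
    """Inverse of concurrency_at: when the assumed linear ramp reaches `users`."""
    if not start <= users <= end:
        raise ValueError("users must lie within the ramp")
    return (users - start) * duration / (end - start)


# When would a linear ramp hit the ~100-user mark where trouble began?
print(f"{seconds_until(100):.1f} s into the rush")
```

Under that linear assumption, the 100-user mark arrives just under 24 seconds in, so the site would have been struggling for more than half of the rush.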

“Ah HA!” I thought. “THIS is the analysis I’ve needed to run all along! TIME TO INSTALL VARNISH!”

Varnish isn’t just for your deck

Varnish is a powerful HTTP cache used by a huge number of web sites. It’s fast and extremely efficient, and it can enable your web site to serve a truly ridiculous amount of content with very little CPU utilization. Varnish runs as a reverse proxy between your end users and your web server, answering most requests out of its cache and passing requests for dynamic content along to the actual web server behind it. For a site with lots of unchanging or slowly changing content, Varnish can multiply the number of pages the site is capable of serving by a tremendous factor.
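To make the hit/miss/pass idea concrete, here is a toy Python model of a caching reverse proxy. It is only a sketch of the concept, not how Varnish is implemented or configured (real Varnish setups are configured in VCL); the 120-second TTL and the `/wp-admin` prefix treated as dynamic are illustrative assumptions.

```python
import time
from typing import Callable, Dict, Tuple

Backend = Callable[[str], bytes]


class CachingProxy:
    """Toy cache in front of a backend web server (not Varnish's design)."""

    def __init__(self, backend: Backend, ttl: float = 120.0,
                 dynamic_prefixes: Tuple[str, ...] = ("/wp-admin",)) -> None:
        self.backend = backend
        self.ttl = ttl                             # assumed cache lifetime, seconds
        self.dynamic_prefixes = dynamic_prefixes   # hypothetical "never cache" paths
        self.cache: Dict[str, Tuple[float, bytes]] = {}
        self.backend_calls = 0

    def get(self, path: str) -> bytes:
        # Pass: dynamic content always goes to the backend and is never stored.
        if path.startswith(self.dynamic_prefixes):
            self.backend_calls += 1
            return self.backend(path)
        now = time.monotonic()
        entry = self.cache.get(path)
        # Hit: a fresh copy is in memory, so the backend is not touched.
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]
        # Miss: fetch once from the backend and keep the result.
        self.backend_calls += 1
        body = self.backend(path)
        self.cache[path] = (now, body)
        return body
```

With this model, a thousand requests for `/` reach the backend only once and everything else comes out of memory, which is where the CPU savings come from.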

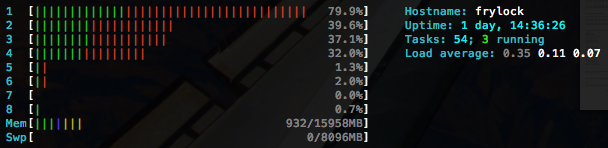

Bigdinosaur.org runs Nginx, and Nginx is already a pretty efficient web server. Still, there’s always room for improvement, and Varnish can make a huge difference. For example, here’s the Bigdino web server when I hit it with Siege on the LAN, simulating 1000 concurrent connections:

The web server has a quad-core i7 with hyperthreading, and all four Nginx worker processes are involved in handling the load. There’s obviously still plenty of breathing space left, but the CPU is definitely having to flex its muscles to keep up.
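LAN benchmarks like this one are typically closed-loop: each simulated user sends a request, waits for the answer, and immediately sends the next. The asyncio sketch below illustrates that idea; it is not Siege’s actual implementation, and `fake_fetch` is a hypothetical placeholder you would swap for a real HTTP request.

```python
import asyncio
import time
from typing import Awaitable, Callable, Dict, List, Optional

Fetch = Callable[[], Awaitable[None]]


async def run_load(fetch: Fetch, users: int, requests_per_user: int,
                   timeout: float = 5.0) -> Dict[str, Optional[float]]:
    """Closed-loop load: `users` workers each send requests back to back."""
    latencies: List[float] = []
    timeouts = 0

    async def worker() -> None:
        nonlocal timeouts
        for _ in range(requests_per_user):
            start = time.perf_counter()
            try:
                # Give up on any request that takes longer than `timeout` seconds.
                await asyncio.wait_for(fetch(), timeout)
                latencies.append(time.perf_counter() - start)
            except asyncio.TimeoutError:
                timeouts += 1

    # All simulated users run concurrently on a single event loop.
    await asyncio.gather(*(worker() for _ in range(users)))
    mean = sum(latencies) / len(latencies) if latencies else None
    return {"ok": len(latencies), "timeouts": timeouts, "mean_latency": mean}


async def fake_fetch() -> None:
    """Hypothetical stand-in for an HTTP GET; replace with a real client call."""
    await asyncio.sleep(0.01)


if __name__ == "__main__":
    print(asyncio.run(run_load(fake_fetch, users=1000, requests_per_user=3)))
```

Because every worker waits for its own response before sending again, the concurrency number is really the count of requests in flight at once, not a request rate.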

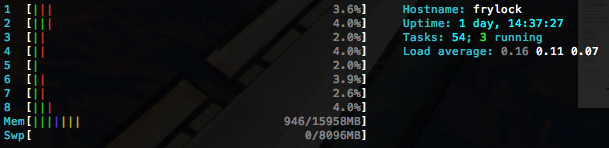

Here’s the same workload, but run through Varnish:

Same benchmark with the same tool, except this time Varnish is serving the responses directly out of its built-in RAM cache, without making any file system calls or really even bothering the web server’s worker processes at all. Even for a fast web server like Nginx, the benefits are obvious. With a web application like WordPress, which relies heavily on generated pages, putting Varnish in front to serve the front page and individual blog pages as static content can bump the traffic your site can handle by a factor of a thousand. This is huge.

Re-benching with Varnish

I couldn’t shake some obvious doubts about the benchmark results, though. Nginx appeared to be able to handle the LAN-based Siege benchmark at 1000 concurrent connections without any problems at all; why was it failing at only 10% of that load when I rushed the site with Blitz.io? What was I missing? Did the added latency of the Internet have that much of an impact on the number of requests served? Obviously latency will have some impact, but it shouldn’t have that much of one…should it?
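One way to reason about the latency question is Little’s law in its closed-loop form, X = N / (R + Z): N users, each waiting R seconds for a response and Z seconds of think time before the next request, generate about X requests per second. The sketch assumes that model; whether Blitz.io adds any think time between requests isn’t stated here, so `think_time_s` is a hypothetical knob.

```python
def request_rate(concurrent_users: int, latency_s: float,
                 think_time_s: float = 0.0) -> float:
    """Closed-loop throughput: users / (response time + think time).

    Each user finishes one request every (latency + think time) seconds,
    so at a fixed user count the rate the server sees falls as latency rises.
    """
    cycle_s = latency_s + think_time_s
    if cycle_s <= 0:
        raise ValueError("latency plus think time must be positive")
    return concurrent_users / cycle_s


# Same 100 users at a low and a high latency.
for latency in (0.15, 0.30):
    print(f"{latency * 1000:.0f} ms -> {request_rate(100, latency):.0f} req/s")
```

Under this model, doubling latency from 150 ms to 300 ms halves the request rate the server sees, so extra Internet latency by itself should lower the load on the server, not raise it.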

With Varnish in place, I rushed my site again with Blitz, using the same test parameters as before. The results weren’t what I was expecting.

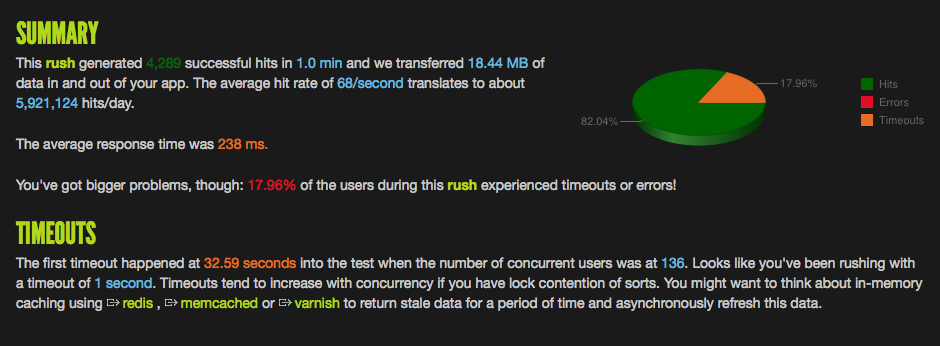

Things had improved slightly over not having Varnish in place, but damned if the site still didn’t fall on its butt after a certain point, dropping connections like mad. The rush summary held a bit more information:

According to the summary, at 136 simultaneous connections, the web server started responding to some requests with timeouts. The frequency of the timeouts increased rapidly as the concurrency ramped, with latency climbing at the same time.
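Pinpointing the concurrency where timeouts start is easy to automate if you have per-interval numbers. The `Sample` record below is a hypothetical layout (it is not Blitz.io’s export format); the function returns the user count at the first interval whose timeout fraction exceeds a threshold.

```python
from typing import Iterable, NamedTuple, Optional


class Sample(NamedTuple):
    """One interval of a load test (hypothetical record layout)."""
    users: int      # simulated concurrent users during the interval
    hits: int       # requests answered successfully
    timeouts: int   # requests that timed out


def first_timeout_point(samples: Iterable[Sample],
                        min_rate: float = 0.0) -> Optional[int]:
    """Concurrency at which the timeout fraction first exceeds `min_rate`."""
    for s in samples:
        total = s.hits + s.timeouts
        if total and s.timeouts / total > min_rate:
            return s.users
    return None  # no interval crossed the threshold
```

With the default `min_rate=0.0`, any timeout at all counts, in the spirit of a summary that flags the first timeouts; a small positive threshold ignores isolated blips.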

This was profoundly disheartening. I went back and reviewed my Varnish configuration and logs, convinced that I must be missing something. I spent probably an hour testing and re-testing, both with more rushes and with more LAN-based benchmarking, and the results were always the same: at a certain concurrency level, rushes began to receive an ever-increasing number of timeouts instead of the site’s content. This likely would have gone on forever if I hadn’t received a frantic instant message from one of the players on the Bigdino Minecraft server: “What the hell is going on?” he asked. “We keep getting kicked out of Minecraft!”

I stared at it for a few seconds, and then a light went on in my head like a supernova exploding. The web server was working fine. My Internet connection was the problem!

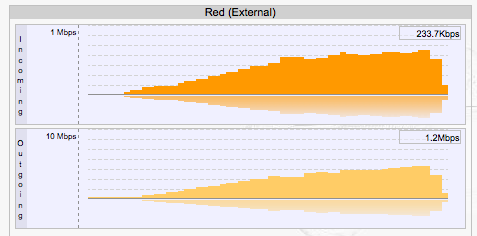

Armed with this revelation, I ran the rush again, this time while logged into bigdino’s Smoothwall Linux firewall & router, and as the rush unfolded, I watched the outbound network traffic climb and climb, soaring past my Comcast Business Class connection’s 2Mbps upload limit and flutter valiantly at 5Mbps for several seconds before collapsing in exhaustion at the end of the test:

Every time I’d been rushing over the past hour, I’d been sucking up every single available bit of upstream bandwidth that Comcast allowed my connection to have. The point of saturation occurred right at 130-ish concurrent connections, where serving the Bigdinosaur.org front page more than 100 times a second demanded more bandwidth than the connection could provide. As an added bonus, the folks playing Minecraft on the Bigdino server, which obviously shares the same connection, were getting kicked because the Minecraft server was being similarly starved for connectivity.
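The arithmetic behind this is simple enough to sketch: convert megabits per second to bytes per second, then divide by the request rate to get the largest average response a link can carry (or by the response size to get the request rate that fills it). These helpers ignore TCP/IP and HTTP header overhead, so they give optimistic upper bounds, not exact figures.

```python
BITS_PER_BYTE = 8


def max_bytes_per_response(link_mbps: float, requests_per_s: float) -> float:
    """Largest average response (bytes) a link can sustain at a request rate.

    Ignores TCP/IP and HTTP header overhead, so real capacity is lower.
    """
    return link_mbps * 1_000_000 / BITS_PER_BYTE / requests_per_s


def max_requests_per_s(link_mbps: float, response_bytes: float) -> float:
    """Request rate at which responses of a given size saturate the link."""
    return link_mbps * 1_000_000 / BITS_PER_BYTE / response_bytes


print(max_bytes_per_response(5, 100))  # bytes per response at ~5 Mbps, 100 req/s
print(max_requests_per_s(2, 6250))     # req/s a 2 Mbps link allows at that size
```

Assuming the roughly 5 Mbps peak coincided with about 100 requests per second, the average response could have been no larger than about 6,250 bytes; at the nominal 2 Mbps limit, responses of that size would top out around 40 requests per second.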

D’oh!

It was truly a forehead-slapping moment, and one that brought to light the tremendous loads under which big sites have to operate. My puny business-class connection isn’t anywhere near up to the task of handling a full-on Slashdotting of yore; even with purely static content coming out of a fast Varnish cache, and even with the server gzip-compressing the compressible content, the sheer bulk of data that must be sent out is just too much.
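A quick way to see why gzip helps with some content and not others is to compress two kinds of payload with Python’s standard `gzip` module. The sample inputs are made up for illustration: repetitive markup shrinks dramatically, while bytes with no redundancy (much like already-compressed images) don’t shrink at all.

```python
import gzip


def gzip_savings(body: bytes, level: int = 6) -> float:
    """Fraction of bytes saved by gzip; negative if the output is larger."""
    if not body:
        raise ValueError("body must not be empty")
    compressed = gzip.compress(body, compresslevel=level)
    return 1 - len(compressed) / len(body)


# Made-up payloads: the markup repeats heavily, these 256 bytes never repeat.
html = b"<li><a href='/blog/post'>A blog post title</a></li>\n" * 200
no_redundancy = bytes(range(256))

print(f"HTML savings: {gzip_savings(html):.0%}")
print(f"Non-redundant savings: {gzip_savings(no_redundancy):.0%}")
```

That gap is why gzip shrinks the HTML a server sends but can’t do much for images and other already-compressed files, which still go out over the wire at full size.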

But the good news is that I now know exactly how much load my home connection can take. It’s extremely useful data to have, and I will absolutely revisit Blitz.io any time Comcast changes my connection, to check what the new limits are. Thanks, Blitz.io!