This is an old post. It may contain broken links and outdated information.

In the previous entry, I touched briefly on how some experimentation with Blitz.io led to me installing Varnish Cache on the Bigdino web server. Varnish Cache is a fast and powerful web accelerator, which works by caching your web site’s content (html and css files, javascript files, images, font files, and whatever else you got) in RAM. It differs from other key-based web cache solutions (like memcache) by not attempting to reinvent the wheel with respect to storing and accessing its cache contents; rather than potentially arguing with its host server’s OS and virtual memory subsystem on what gets to live in RAM and what gets paged out to disk, Varnish Cache relies wholly on the host’s virtual memory subsystem for handling where its cache contents live.

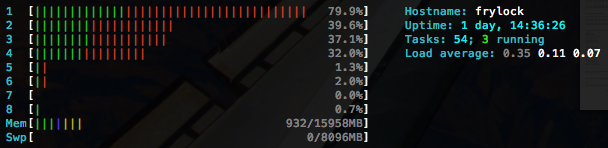

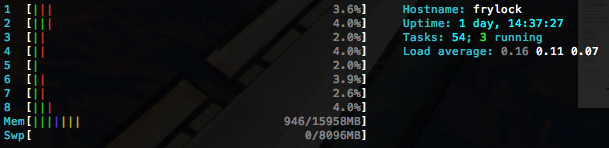

Varnish is able to serve objects out of its cache much faster and using far fewer host resources than a web server application. While deploying it to see how it might help or hinder my Blitz.io runs, I did some brief and extremely unscientific testing on the Bigdino Nginx web server using Siege to simulate 1000 concurrent HTTP connections. When hitting Nginx directly, without Varnish in the middle, the server showed a considerable amount of CPU usage:

Running the same benchmark against the same host but pointing Siege at Varnish’s TCP port instead of Nginx’s yields considerably lower CPU utilization:

In the second instance, Varnish is servicing the HTTP requests directly out of its cache, which is sitting in RAM. Varnish doesn’t have to bother Nginx to have it evaluate the requests and pull things up off of slow disk (even the web server’s fast SSD is glacially slow compared to RAM!); the improvements would be even greater if there were any PHP code to be executed in order to dig up and serve static content.

As might be gleaned from the plethora of “Make X work on Nginx!” blog posts here, Bigdino supports a number of different sites and web applications. There’s The Chronicles of George, which is all static content but which has a forum running Vanilla; there’s a couple of Wordpress blogs; there’s a MediaWiki-based wiki for Minecraft stuff, and then there’s the Bigdino main site and the blog. This is a fair mix of static and dynamic content, but through some trial and error and a whole hell of a lot of Googling, I’ve put together a Varnish configuration which handles all of the sites very well, and I’d like to share.

GIMME THE CACHE!

Varnish works as a reverse-proxy, receiving requests from the Big Bad Internet and either replying to them directly or forwarding them on to one or more backend web servers. In smaller setups like mine, Varnish can live on the same physical server as your web server software; for bigger sites, it can be on a separate server. Multiple physical Varnish servers can work together to load balance incoming requests to multiple backend servers, if needed—Varnish is used in lots of very large sites to help absorb load.

Varnish is perfect if you have a site which consists of no dynamic content; ironically, a site with no dynamic content would probably derive the least amount of benefit from Varnish, since it’s the dynamic stuff which puts the greatest load on web servers. However, a site made up of all dynamic content is difficult to adapt to Varnish, since the very idea of a web cache involves not regenerating stuff for every request. The trick is figuring out which elements in a web page are dynamic and which are cacheable.

Right out of the box, Varnish’s default configuration does a pretty darn good job. Because HTTP sucks for persistent sessions, most sites which require you to log in use cookies to store information about a user being logged in, including their session ID and whatever else needs to be stored. Varnish immediately assumes that any web page elements with cookies are customized per user, and excludes them. This is smart, but some sites have cookies on every page—all my sites, for example, track visitor metrics with Piwik, which sets cookies on every page. This kind of thing can flummox Varnish, and must be worked around.

Further, different web applications can have different rules for what should and shouldn’t be cached; even worse, sometimes things that a web application says shouldn’t be cached might actually benefit from caching. Only experimentation will really tell you what you should and shouldn’t cache.

Varnish also does not deal with HTTPS, because encryption makes it impossible for Varnish to distinguish what the objects are that it is passing back and forth from the user to the backend. There are workarounds (like installing Pound in front of Varnish and terminating SSL/TLS there, so that Varnish and the backend see only unencrypted traffic), but that’s unnecessarily complex for our small web site. I might fiddle with this in the future, but for this blog post, we’re going to simply exclude HTTPS traffic altogether.

All that being said, let’s dive into the config I’m using with Varnish.

Defaults file

With Varnish installed from a repo with aptitude install varnish, there are two files to edit in order to modify Varnish’s behavior. The first is the defaults file under /etc/default/varnish, which lets you set the TCP port Varnish listens on, how much RAM it should allocate for its cache, what config file Varnish loads, and a number of other parameters. I’ve modified my Varnish cache to use 8 GB of RAM, which I don’t think I’ll ever come close to needing, but which I’d like to have preallocated just in case; I’m also running Varnish on TCP port 80 so that it gets all web traffic to all hostnames.

    ...
    DAEMON_OPTS="-a :80 \
                 -T localhost:6082 \
                 -f /etc/varnish/default.vcl \
                 -S /etc/varnish/secret \
                 -p thread_pool_min=200 \
                 -p thread_pool_max=4000 \
                 -p thread_pool_add_delay=2 \
                 -p session_linger=100 \
                 -s malloc,8G"
    ...

The section in the defaults file I’ve modified is the DAEMON_OPTS section. When it’s first installed, Varnish will run on TCP port 6081 in order to not squash any previously-installed web server, so I’ve changed that and I’ve also changed the -s argument in order to bump the amount of RAM.

VCL file

The rest of the Varnish work is done in the VCL file. VCL stands for “Varnish Configuration Language”, and it’s a C-like set of configuration directives which Varnish translates into C at runtime and executes. You can also append native C code directly into your VCL files, if you need Varnish to do some particular tricks which you can’t quite communicate through VCL, but we’re not going to do anything like that in this example.

The VCL file which Varnish comes with is called default.vcl and it contains a commented-out listing of Varnish’s default inbuilt rules. This is great because it allows for study, and the default rules are automatically appended to, and run after, any custom rules you end up adding. Best practice would probably be to create a new .vcl file and begin editing that, but I admit to being lazy and just modifying the VCL file directly. I’m all about the lazy way.

The VCL file is divided up into declarations and subroutines, and we’ll look at each one.

Backend declaration

The first bit in the VCL file defines the “backend”, which is Varnish’s term for the “real” web servers from which it caches content. You can have one or several, local or remote.

    backend default {
        .host = "127.0.0.1";
        .port = "8080";
        .connect_timeout = 600s;
        .first_byte_timeout = 600s;
        .between_bytes_timeout = 600s;
        .max_connections = 800;
    }

You can get really fancy with Varnish and stick it in front of many different web servers, and you can have many different Varnish servers all working together to share the load; we need neither of those things here. Nginx and Varnish live on the same server, and Nginx has been modified to listen for HTTP traffic on port 8080. This is where I specify that, along with some defaults.

Other housekeeping

    # Import Varnish Standard Module so I can serve custom error pages
    import std;

    # Set who is allowed to purge
    acl purge {
        "localhost";
        "10.10.10.0"/24;
    }

The first config directive is new to Varnish version 3, and adds a Varnish mod called the Varnish Standard Module. This module is versatile and does lots of stuff, but I use it explicitly a bit later in the config to enable Varnish to access the file system in order to serve up a non-standard error page, which is stored as an HTML file. Without this, you either use the standard Varnish error page or you insert HTML directly into the VCL file (or resort to an ugly hack).

The next part is probably worth more detail than I’m going to spend on it: we establish an access control list, named purge, then add both localhost and my LAN segment to it. The ACL itself has no permissions or actions assigned to it, but we’re going to reference it in the next part of the config. If you wanted to, you could construct lots of different ACLs and use them to assign permissions—you could segregate access to portions of your web site by host or IP address, for example, or restrict who is allowed to use POST actions. The system is quite flexible.
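To make the ACL idea concrete, here is a minimal Python sketch of the kind of membership test the purge ACL performs. It is only a model, not how Varnish implements ACLs: Varnish resolves "localhost" itself, while this sketch assumes it means just the IPv4 loopback address, and the name may_purge is made up for illustration.

```python
import ipaddress

# Networks mirroring the "purge" ACL above. Treating "localhost" as
# 127.0.0.1 only is an assumption of this sketch.
PURGE_ACL = [
    ipaddress.ip_network("127.0.0.1/32"),
    ipaddress.ip_network("10.10.10.0/24"),
]


def may_purge(client_ip: str) -> bool:
    """Return True if client_ip falls inside any network in the ACL."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in PURGE_ACL)
```

Any host on the 10.10.10.0/24 LAN segment passes the check, while an address from anywhere else is rejected.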

sub vcl_recv

Next are the main Varnish subroutines, which control what Varnish does to network traffic at all the different stages of interaction with that traffic. The first up is vcl_recv, which tells Varnish what to do with requests it receives from end users as it receives them. Varnish can perform lots and lots of different actions here; this subroutine is used for basic traffic direction (if you have multiple vhosts, for example, you can use this sub to exclude some vhosts from ever being cached) and cookie removal.

As stated in the beginning, cookie removal is important; worse, you can’t be indiscriminate about it, because some cookies are very important. If you’re running a web forum, for example, the forum almost certainly uses cookies to keep track of who’s logged in—can’t get rid of those! On the other hand, cookies set by things like Google Analytics or Piwik or Open Web Analytics can absolutely be removed, since they don’t really do anything from the web server’s perspective—all their magic is done on the client side.

    sub vcl_recv {
        # Ignore all "POST" requests - nothing cacheable there
        if (req.request == "POST") {
            return (pass);
        }

        # In the event of a backend overload (HA!),
        # serve stale objects for up to two minutes
        set req.grace = 2m;

        # Ignore all traffic to analytics.bigdinosaur.org,
        # since that's all internal
        if (req.http.host ~ "analytics.bigdinosaur.org") {
            return (pass);
        }

        # Vanilla forum-specific items
        #
        # Ignore Vanilla "WhosOnline" plugin chatter
        if (req.url ~ "/plugin/imonline") {
            return (pass);
        }

        # Ignore Vanilla Analytics
        if (req.url ~ "/settings/analyticstick.json") {
            return (pass);
        }

        # Ignore Vanilla notification popups
        if (req.url ~ "/dashboard/notifications/inform") {
            return (pass);
        }

        # Ignore Minecraft Overviewer playermarkers
        if (req.url ~ "/markers.json(\?[0-9]+)?$") {
            return (pass);
        }

        # Remove cookies from most kinds of static objects, since we want
        # all of these things to be cached whenever possible - images,
        # html, JS, CSS, web fonts, and flash. There's also a regex on the
        # end to catch appended version numbers.
        if (req.url ~ "(?i)\.(png|gif|jpeg|jpg|ico|swf|css|js|html|htm|woff|ttf|eot|svg)(\?[a-zA-Z0-9\=\.\-]+)?$") {
            remove req.http.Cookie;
        }

        # Use the "purge" ACL we set earlier to allow purging from the LAN
        if (req.request == "PURGE") {
            if (!client.ip ~ purge) {
                error 405 "Not allowed.";
            }
            return (lookup);
        }

        # Wordpress - strip all cookies for everything except the login page
        # and the admin section, because Wordpress adds cookies EVERYWHERE
        if (req.http.host ~ "littlel.bigdinosaur.org") {
            if (req.url ~ "wp-(login|admin)") {
                return (pass);
            }
        }

        # Remove Google Analytics and Piwik cookies everywhere
        if (req.http.Cookie) {
            set req.http.Cookie = regsuball(req.http.Cookie, "(^|;\s*)(__[a-z]+|has_js)=[^;]*", "");
            set req.http.Cookie = regsuball(req.http.Cookie, "(^|;\s*)(_pk_(ses|id)[\.a-z0-9]*)=[^;]*", "");
        }
        if (req.http.Cookie == "") {
            remove req.http.Cookie;
        }

        # Tell Varnish to use X-Forwarded-For, to set "real"
        # IP addresses on all requests
        remove req.http.X-Forwarded-For;
        set req.http.X-Forwarded-For = req.http.rlnclientipaddr;
    }

That’s a big damn long block of code, but fortunately that’s also the longest sub. Most of the lines in it are explained in the comments, but let’s take a closer look at a few of them.

    set req.grace = 2m;

No matter how badass your web server is, it still has to generate its dynamic content, and that can take time. With one or two users requesting stuff, that’s never a problem; with 50,000 folks all wanting to read your new loltastic Wordpress post because it got linked on the front page of Reddit, that can be an issue. To fight the sudden rushes of traffic, Varnish has a feature called grace mode, which lets it send a request for updated content to the backend web server, but reply to pending user requests with the “stale” current version until the web server spits out the new content. It’s definitely better to serve stale content than have your web site puke out an error!

This statement in vcl_recv must be matched with another statement in vcl_fetch, which we’ll get to shortly; otherwise, it won’t do anything.

    if (req.http.host ~ "analytics.bigdinosaur.org") {
        return (pass);
    }

One of the vhosts on the BigDino web server, Analytics, hosts my Piwik instance. Caching traffic from an analytics server is obviously counterproductive; for one, it’s private and low-traffic, and for another, you always want the most recent results from analytics software. You can use a similar statement to exclude any private vhosts you might have running.

After this, I have some lines for Vanilla, the web forum application I’m using to host the Chronicles of George forums. If you’ve come here looking for a Vanilla-specific Varnish config, you’ll likely want to include these lines!

Then there’s a line to ignore requests about the Minecraft Overviewer JSON file used to show player positions on the BigDino Minecraft map, and then we get into the really important part: the part with the cookies.

    if (req.url ~ "(?i)\.(png|gif|jpeg|jpg|ico|swf|css|js|html|htm|woff|ttf|eot|svg)(\?[a-zA-Z0-9\=\.\-]+)?$") {
        remove req.http.Cookie;
    }

This part’s kind of a big deal. Here we check for cookies attached to requests for static objects, like images and CSS files, and strip them out. There’s all kinds of reasons you might be setting cookies on static files, but generally those reasons have to do with tracking who clicks on what when, and that kind of stuff is better handled by a smart analytics package. Anything with cookies attached to it is ignored by Varnish, and so we need them gone.

There’s also a regex on the end of that statement. Some web apps (like Vanilla!) attach version strings to the end of CSS and JS files when they serve them out. Varnish treats an object’s entire URL as part of that object’s unique identifier, so custom.css and custom.css?v=1.25 would be treated as two different files; worse, the string on the end would make it not match a regex looking just at the file extension.
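If you want to check which URLs the static-object rule would catch before deploying it, the pattern can be tried out on its own. Here is a small Python sketch that uses the same regular expression as the vcl_recv rule; the function name is_static and the sample URLs are made up for illustration, and Python's re module stands in for the regex engine Varnish uses.

```python
import re

# Same pattern as the vcl_recv rule, written as a single expression.
STATIC_RE = re.compile(
    r"(?i)\.(png|gif|jpeg|jpg|ico|swf|css|js|html|htm|woff|ttf|eot|svg)"
    r"(\?[a-zA-Z0-9\=\.\-]+)?$"
)


def is_static(url: str) -> bool:
    """Return True if the URL would have its cookies stripped."""
    return STATIC_RE.search(url) is not None


for url in ("/themes/custom.css", "/themes/custom.css?v=1.25", "/discussions"):
    print(url, is_static(url))
```

Note that the optional suffix only allows letters, digits, "=", "." and "-", so a version string containing something else (an underscore, say) would not match and the cookies would be left in place.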

    if (req.request == "PURGE") {
        if (!client.ip ~ purge) {
            error 405 "Not allowed.";
        }
        return (lookup);
    }

Here’s where we use that “purge” ACL we built earlier. Sometimes you need to expunge an item from cache before it naturally expires. Varnish has a complex system to do this using things called bans, which frankly even after reading the documentation a dozen times is still kind of confusing to me in a lot of ways, since bans don’t appear to actually remove objects but instead just bloat your cache. However, you can quickly remove single objects from cache using curl run from a system which matches your purge ACL, like this:

    $ curl -X PURGE http://your.site.com/thingtopurge.html

The -X switch tells curl to use a request method called “PURGE”, which isn’t a standard HTTP method, but which we’ve just explicitly defined. On receiving a “PURGE” request, assuming the host sending the request is in the ACL, Varnish routes the request through its “lookup” response, which causes some more actions we’ll get to in a bit.
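If you would rather trigger purges from a script than from curl, the same request can be built with Python's standard library. This is a rough sketch, not part of my setup: the function names are invented for the example, and it only works when run from a host that matches the purge ACL.

```python
import urllib.request


def build_purge_request(url: str) -> urllib.request.Request:
    """Build (but do not send) an HTTP request using the PURGE method."""
    return urllib.request.Request(url, method="PURGE")


def purge(url: str) -> int:
    """Send the PURGE request and return the HTTP status code.

    A rejection by the ACL (405) surfaces as urllib.error.HTTPError.
    """
    with urllib.request.urlopen(build_purge_request(url)) as resp:
        return resp.status
```

Calling purge("http://your.site.com/thingtopurge.html") does the same job as the curl command above.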

Next is a quick Wordpress section, which strips all cookies except those used to log in to the admin section. Wordpress shits all kinds of cookies all over the place, and unless you’re heavily using Wordpress comments, you don’t need any of them.

Then, we remove cookies for Google Analytics and Piwik. These lines simply look for cookies which match the common cookie names for GA and Piwik and remove them. Since the cookies are already set on the client side and they do all their work there, they don’t need to be touched by our web server.
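The two regsuball patterns can be tested outside Varnish to see what they leave behind. Below is a minimal Python sketch using the same expressions; the function name and the sample cookie strings are made up, and returning None stands in for removing the header when it ends up empty.

```python
import re
from typing import Optional

# The same two patterns used in vcl_recv.
GA_RE = re.compile(r"(^|;\s*)(__[a-z]+|has_js)=[^;]*")
PIWIK_RE = re.compile(r"(^|;\s*)(_pk_(ses|id)[\.a-z0-9]*)=[^;]*")


def strip_analytics_cookies(cookie: str) -> Optional[str]:
    """Drop GA and Piwik cookies; return None if nothing is left."""
    cookie = GA_RE.sub("", cookie)
    cookie = PIWIK_RE.sub("", cookie)
    return cookie if cookie != "" else None


print(strip_analytics_cookies("__utma=123.456; _pk_id.1.abcd=xyz"))
print(strip_analytics_cookies("Vanilla=abc; _pk_ses.1.abcd=*"))
```

A header holding only analytics cookies comes out empty, so the request becomes cacheable, while a session cookie such as the hypothetical Vanilla=abc survives. One quirk worth knowing: when the removed cookie comes first, the leftover value keeps its leading separator ("; Vanilla=abc").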

This next part is extremely important:

    # Tell Varnish to use X-Forwarded-For, to set "real"
    # IP addresses on all requests
    remove req.http.X-Forwarded-For;
    set req.http.X-Forwarded-For = req.http.rlnclientipaddr;

Remember that Varnish sits in front of your web server and forwards requests to it. Without some extra help, your web server would see every single request it gets as originating not from the Internet, but rather directly from the Varnish server, with the Varnish server’s IP address (which, for us in this config, is localhost). That’s bad. Fortunately, we can tell Varnish to set an HTTP header called X-Forwarded-For which contains the actual client’s IP address. We have to do this in several places; this is the first and most important. This two-line statement strips any existing X-Forwarded-For header and replaces it with a new, fresh one.

In order to make your web server actually see and understand the header, you might need to alter your web server config slightly. For Nginx, this is easily done by adding the following lines in the http section of /etc/nginx/nginx.conf:

    # Add support for real IP addresses when receiving traffic from Varnish
    set_real_ip_from 127.0.0.1;
    real_ip_header X-Forwarded-For;

This tells Nginx to accept X-Forwarded-For headers on traffic from 127.0.0.1, which is where Varnish is installed. If you had Varnish on one or more separate servers, you’d obviously use those addresses there. Note that for this to work Nginx must have been compiled with the http_realip_module, so if you’re using the nginx-light package on Ubuntu, you’ll need to switch to nginx-full.
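To illustrate what those two Nginx directives amount to, here is a simplified Python model of the decision. It is a sketch under assumptions, not Nginx's actual code: it assumes the default non-recursive behavior (the last address in the header is used), and the function name and sample addresses are invented for the example.

```python
import ipaddress
from typing import Optional

# Peers whose X-Forwarded-For header we trust (set_real_ip_from).
TRUSTED = [ipaddress.ip_network("127.0.0.1/32")]


def effective_client_ip(peer_ip: str, x_forwarded_for: Optional[str]) -> str:
    """Return the address the web server should log for this request."""
    peer = ipaddress.ip_address(peer_ip)
    trusted = any(peer in net for net in TRUSTED)
    if not trusted or not x_forwarded_for:
        # Untrusted peer or no header: keep the connecting address.
        return peer_ip
    # Non-recursive mode: take the last address listed in the header.
    return x_forwarded_for.split(",")[-1].strip()
```

A request arriving from Varnish on 127.0.0.1 is logged with the address from the header, while a request from any other peer keeps its own address no matter what header it sends.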

sub vcl_pass and sub vcl_pipe

The next subroutine, vcl_pass, tells Varnish what to do with traffic that doesn’t get cached at all. The “pass” subroutine contains actions that Varnish will execute on traffic that is passed directly from the user on to the backend without entering the cache. “Pipe” is similar, but is more suited for streaming large objects, since Varnish doesn’t attempt to distinguish individual objects passing after the first. Generally, “pipe” is moving toward being deprecated and “pass” is the preferred thing to use; I give them the same settings and use “pass” unless I have a good reason not to (which, so far, is never).

    sub vcl_pass {
        set bereq.http.connection = "close";
        if (req.http.X-Forwarded-For) {
            set bereq.http.X-Forwarded-For = req.http.X-Forwarded-For;
        }
        else {
            set bereq.http.X-Forwarded-For = regsub(client.ip, ":.*", "");
        }
    }

    sub vcl_pipe {
        set bereq.http.connection = "close";
        if (req.http.X-Forwarded-For) {
            set bereq.http.X-Forwarded-For = req.http.X-Forwarded-For;
        }
        else {
            set bereq.http.X-Forwarded-For = regsub(client.ip, ":.*", "");
        }
    }

In both sections, the first line ensures that the backend HTTP connections are closed as soon as possible. This is important because otherwise the same backend connection can be inadvertently used to deliver data to different front-end (user) connections, which might result in unexpected behavior.

The next part, with the if/else statement, continues our use of the X-Forwarded-For header. Here we check and see if the request from the user has one; if it does, Varnish sets the same header on the backend request it passes on, and if it doesn’t have one, Varnish creates one for the backend request using the original client’s IP address. Without these lines, passed & piped traffic would lack the correct headers and would all show up in the backend web server’s logs as originating from 127.0.0.1.
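The if/else logic is small enough to model directly. The Python sketch below mirrors it, including the regsub call that trims everything from the first colon onward; the function name and sample addresses are hypothetical.

```python
import re
from typing import Optional


def backend_forwarded_for(req_xff: Optional[str], client_ip: str) -> str:
    """Pick the X-Forwarded-For value sent on to the backend."""
    if req_xff:
        # The request already carries the header: pass it through.
        return req_xff
    # Equivalent of regsub(client.ip, ":.*", ""): drop the first
    # colon and everything after it.
    return re.sub(r":.*", "", client_ip, count=1)
```

One thing the model makes visible: the colon-stripping pattern would also cut an IPv6 address such as 2001:db8::1 down to "2001", so this fallback is only really suited to IPv4 clients.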

sub vcl_fetch

The next subroutine, vcl_fetch, tells Varnish what to do with an object when it’s been retrieved from the backend but before it gets served to the client. This is where we can do a bit more manipulation on the stuff our web server does in order to make it more cache-friendly.

    sub vcl_fetch {
        set beresp.grace = 2m;

        if (req.http.host ~ "littlel.bigdinosaur.org") {
            if (!(req.url ~ "wp-(login|admin)")) {
                remove beresp.http.set-cookie;
            }
        }

        # Strip cookies before static items are inserted into cache.
        if (req.url ~ "\.(png|gif|jpg|swf|css|js|ico|html|htm|woff|eot|ttf|svg)$") {
            remove beresp.http.set-cookie;
        }

        # Adjusting Varnish's caching - hold on to all cacheable objects for
        # 24 hours. Objects declared explicitly as uncacheable are held for
        # 60 seconds, which helps in the event of a sudden ridiculous rush
        # of traffic. Initial exceptions are set for the CoG content, which
        # should be cached essentially forever, and the Overviewer map stuff.
        if (req.http.host ~ "www.chroniclesofgeorge.com") {
            set beresp.ttl = 1008h;
        }
        elseif (req.url ~ "map-newworld") {
            set beresp.ttl = 120s;
        }
        else {
            if (beresp.ttl < 24h) {
                if (beresp.http.Cache-Control ~ "(private|no-cache|no-store)") {
                    set beresp.ttl = 60s;
                }
                else {
                    set beresp.ttl = 24h;
                }
            }
        }
    }

Three major things are happening here. First, at the top, we’re supplying the other setting necessary to make Grace Mode function correctly, by placing a two minute “grace” time-to-live stamp on everything. This is how long Varnish will keep objects in its cache after their TTL has expired (whereas the other setting in vcl_recv controls how long Varnish will use grace mode on an object).

Next, we’re wearing pants with our suspenders for cookie stripping, first yanking out any cookies Wordpress tries to set on anything other than the admin area and then ensuring any cookies the web server sets on images and other static things get removed before the objects are placed into cache.

Finally, and most importantly, we set how long objects should stay in cache. This is surprisingly difficult to do “correctly” (i.e., in compliance with IETF RFC 2616). There’s a good explanation here on exactly why this is, but the simple version is that while there’s an established way to tell a web browser how long to keep stuff in its disk cache, there’s no real established method to tell a reverse-proxy cache like Varnish how to do the same thing. There’s no separate “backend cache” attribute to set. So, Varnish hijacks the web server’s TTL on objects, on the assumption the browser cache TTL provides a pretty good sketch of how long an object should be cached.

There are problems with this, though. A badly-coded web app could set no-cache attributes on objects it serves, which would make those things not be cached at all; plus sometimes it’s just annoyingly difficult to deal with defining TTLs on the web server. So, here, I’ve set up an overriding TTL on all my stuff. Content for the Chronicles of George (which will never change) has a very long TTL; content for the Minecraft map (updated every other hour) has a short TTL, and everything else gets its TTL bumped to at least 24 hours.

The exception is if an object has a no-cache or similar attribute set. I’ve elected to respect that, but to also cache the object for sixty seconds just in case. This is to ensure that as much as possible gets held in cache, no matter how small, to offload as much work as possible onto Varnish.
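The TTL rules are easier to reason about when written as a plain function. This Python sketch mirrors the if/elseif/else chain in vcl_fetch, with TTLs in seconds; the function name, the hostname blog.example.org, and the sample URLs are made up for illustration, and re.search stands in for VCL's unanchored "~" match.

```python
import re
from typing import Optional

HOUR = 3600


def effective_ttl(host: str, url: str, backend_ttl: int,
                  cache_control: Optional[str]) -> int:
    """Return the TTL (seconds) an object ends up with in cache."""
    if re.search(r"www.chroniclesofgeorge.com", host):
        return 1008 * HOUR          # CoG content: cache for six weeks
    if re.search(r"map-newworld", url):
        return 120                  # Overviewer map: two minutes
    if backend_ttl < 24 * HOUR:
        if re.search(r"(private|no-cache|no-store)", cache_control or ""):
            return 60               # "uncacheable": hold for a minute
        return 24 * HOUR            # everything else: at least a day
    return backend_ttl              # already 24h or longer: leave alone


print(effective_ttl("blog.example.org", "/feed", 0, "no-cache"))
print(effective_ttl("blog.example.org", "/post", 300, "max-age=300"))
```

One detail the function makes explicit: an object whose backend TTL is already 24 hours or more is left untouched, whatever its Cache-Control header says.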

sub vcl_hit and sub vcl_miss

The next two subs determine what actions Varnish will take if an object is or isn’t present in its cache. They are set identically below, and each contains the actual action steps necessary to purge content.

    sub vcl_hit {
        if (req.request == "PURGE") {
            purge;
            error 200 "Purged.";
        }
    }

vcl_hit lets you define actions for what happens if Varnish finds that the object it’s looking for is already in its cache (a “cache hit”). I use it to actually instruct Varnish to purge objects when directed. The previous purge statement in vcl_recv checks the requester against the ACL and conducts a lookup, and if the object is in cache, this is where the actual purging is done. There are of course other actions you can define here, but I’m not using this for anything else right now.

    sub vcl_miss {
        if (req.request == "PURGE") {
            purge;
            error 200 "Purged.";
        }
    }

Contrary to vcl_hit, vcl_miss is where Varnish looks if it doesn’t find what it’s looking for in cache. There’s an obvious semantic disconnect here: why purge a thing in the subroutine dealing with cache misses? The documentation on cache invalidation makes the point that Varnish stores different versions of an object (like its Vary: variants, if applicable) as different cache entries. Adding a “purge” statement in vcl_miss ensures that all variants of the same named object are purged.

sub vcl_error

We’re almost done. The penultimate section is where we define a custom error message. You can leave this section empty if you don’t mind serving up Varnish’s standard error message; I prefer something that fits with my web site’s theme, so I use the file-reading capability imparted by the Varnish Standard module imported at the beginning of the VCL file and serve up my own error.

    sub vcl_error {
        set obj.http.Content-Type = "text/html; charset=utf-8";
        set obj.http.MyError = std.fileread("/var/www/error/varnisherr.html");
        synthetic obj.http.MyError;
        return(deliver);
    }

This is a little weird in execution, because we can’t actually serve a file; we have to fake it. This sets the right content type on the object being served, sets a custom header called MyError on the object, then uses the synthetic function to conjure up an actual body in the response, instead of just a header, and stuffs the contents of the MyError header into the body. The whole mess is then routed to “deliver”, which is our last section.

You can do all kinds of interesting things in vcl_error, but the stuff most people will be interested in are error-based redirects. The Varnish docs have some good examples to build on.

sub vcl_deliver

The final section, allowing Varnish to take actions on content as it’s being delivered. Primarily used to remove or set HTTP headers just the way you want them.

    sub vcl_deliver {
        # Display hit/miss info
        if (obj.hits > 0) {
            set resp.http.X-Cache = "HIT";
            # set resp.http.X-Cache-Hits = obj.hits;
        }
        else {
            set resp.http.X-Cache = "MISS";
        }

        # Remove the Varnish header
        remove resp.http.X-Varnish;

        # Display my header
        set resp.http.X-Are-Dinosaurs-Awesome = "HELL YES";

        # Remove custom error header
        remove resp.http.MyError;

        return (deliver);
    }

The first part is extremely useful in troubleshooting whether or not Varnish is actually serving an object out of its cache, or from the backend. It sets a custom header (X-Cache) on every single thing served, and the header will show “HIT” if it’s come from cache or “MISS” if it’s come straight from the backend. There’s a line below that (which I’ve commented out) to show the number of times that object has been served from cache as well, if you’d care to see it. This is just about the most helpful tool there is when trying to figure out if you’ve got your Varnish rules set up correctly and the things you expect to be coming from cache are actually coming from cache!
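If you end up checking lots of URLs, reading the X-Cache header can be scripted. Here is a small Python sketch of the check; the function name is made up, and it takes a plain mapping of response headers so it can be fed from whichever HTTP client you prefer.

```python
from typing import Mapping


def served_from_cache(headers: Mapping[str, str]) -> bool:
    """Return True if the X-Cache response header reports a hit."""
    for name, value in headers.items():
        # Header names are case-insensitive, so compare in lowercase.
        if name.lower() == "x-cache":
            return value.strip().upper() == "HIT"
    # No X-Cache header at all: the response did not come via this config.
    return False
```

Requesting the same cacheable URL twice and running both sets of response headers through this function should show a miss followed by a hit.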

Varnish also by default sets an X-Varnish header showing some stuff I don’t care about; I removed it to see how removing headers works, and I’ve left it out. Similarly, below that I’ve inserted a custom header on everything that Varnish serves out. I put it in there originally to try it out, and I’ve kept it in because, yes, dinosaurs are awesome.

The very last thing deals with cleaning up the display of my custom error message by removing the MyError header I’ve set. This is necessary because the header contains HTML, and a header stuffed full of HTML confuses the crap out of most browsers. The header has already been used to create the contents of the error message’s synthetic response body and we don’t need it anymore, so here’s where we get rid of it.

Bringing it all together

That took a lot longer than expected to write down. Wow. Okay, so, if you want a copy of the full production VCL file I’m using, you can grab it here. The comments are a bit different than in the blog post, but the config is identical to what’s been outlined here. The config described in this post is the result of a week or two of Googling and experimenting; I broke my site more than once trying to figure this all out, as the long-suffering members of the CoG forums can attest. The end result, though, seems to be working very well. Static objects are cached, leaving Nginx and the rest of the web server stack free to deal with the generation of dynamic objects, and the end-user experience is (hopefully) quick and painless for static and dynamic stuff alike.

Please let me know in the comments if there are any errors or omissions in the VCL file. If there’s anything I’m doing wrong, or anything I should be doing that I’m not, I’d love to hear about it!